MapAnything

MapAnything is an end-to-end trained transformer model designed for metric 3D geometry reconstruction. It directly regresses scene geometry from various inputs, including images, calibration, poses, or depth data. This single feed-forward model supports over 12 distinct 3D reconstruction tasks, such as multi-image Structure-from-Motion, multi-view stereo, and monocular depth estimation.

MapAnything is a simple, end-to-end trained transformer model for metric 3D reconstruction. It directly regresses the factored metric 3D geometry of a scene and accepts several input types: users can provide images, camera calibration parameters, poses, or existing depth data.
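To make "factored" geometry concrete, here is a minimal sketch of how separately predicted quantities could be composed into a metric 3D point. This listing does not describe the factorization, so the choice of factors (a per-pixel ray direction, a depth along that ray, a camera-to-world pose, and one global metric scale) is an assumption made for illustration. The names `FactoredPoint` and `compose_world_point` are hypothetical and are not part of the MapAnything API.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class FactoredPoint:
    """Hypothetical container for one pixel's factored geometry (assumed layout)."""
    ray: Vec3                             # ray direction in the camera frame
    depth: float                          # distance along the ray, up to scale
    rotation: Sequence[Sequence[float]]   # 3x3 camera-to-world rotation
    translation: Vec3                     # camera position in the world, up to scale
    scale: float                          # single global factor that makes the scene metric


def compose_world_point(p: FactoredPoint) -> Vec3:
    """Combine the factors: X_world = scale * (R @ (depth * ray) + t)."""
    # Point in the camera frame, still up to scale.
    cam = [p.depth * r for r in p.ray]
    # Rotate into the world frame.
    rotated = [sum(p.rotation[i][j] * cam[j] for j in range(3)) for i in range(3)]
    # Translate, then apply the global metric scale.
    return tuple(p.scale * (rotated[i] + p.translation[i]) for i in range(3))


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    point = FactoredPoint(
        ray=(0.0, 0.0, 1.0),
        depth=2.0,
        rotation=identity,
        translation=(1.0, 0.0, 0.0),
        scale=0.5,
    )
    print(compose_world_point(point))
```

Keeping the factors separate means that a known quantity, such as a supplied camera pose or depth map, could in principle stand in for the corresponding prediction. Whether MapAnything works this way is not stated here.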

MapAnything runs as a single feed-forward model that supports more than 12 different 3D reconstruction tasks. These include multi-image Structure-from-Motion (SfM), multi-view stereo (MVS), monocular metric depth estimation, object registration, and depth completion. This breadth makes it useful for researchers and developers working on 3D scene understanding.

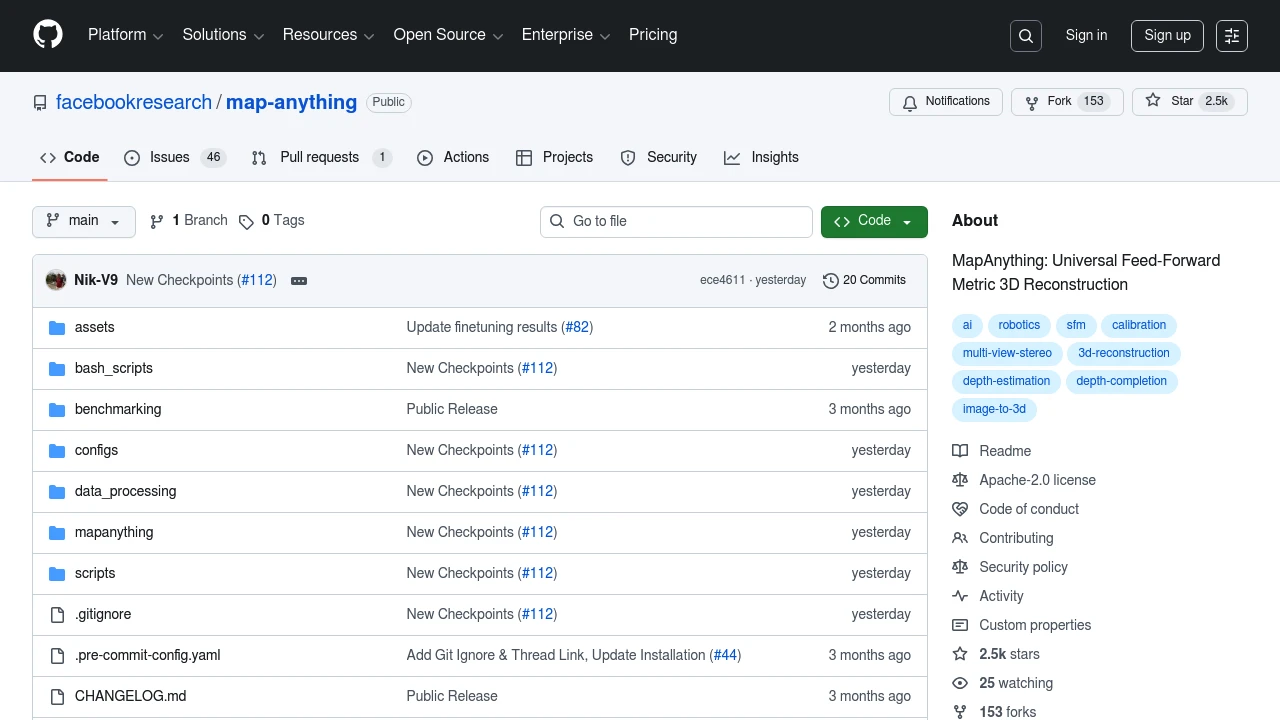

The project is hosted on GitHub by Facebook Research and Carnegie Mellon University. It is open source, which allows community contributions and further development in deep learning for computer vision and geospatial applications.

Disclaimer: We do not guarantee the accuracy of this information. Our documentation of this website on Geospatial Catalog does not represent any association between Geospatial Catalog and this listing. This summary may contain errors or inaccuracies.
